Why I went with Unraid – and you probably should too

The NAS and home server software market is populated by competing alternatives with profound differences in the way they are designed to operate. Here's why Unraid is—in my opinion—the best solution for most users.

DISCLAIMER: opinions stated here are my own and mine only. If you do try and end up choosing to purchase Unraid through the link at the bottom of this page, I will earn a small commission at no additional cost to you. Do consider using my link if you wish to support my content ;)

Unlike personal computers, which basically only run Windows, macOS, or in some cases a mainstream flavor of Linux, the NAS and home server space has several competing alternatives within its own niche of the software market. Back in 2019, MakeUseOf released an article titled "5 Reasons Why Unraid Is The Ultimate Home NAS Solution". Do the points made by the article still hold true in 2023? Here's why the answer is still a resounding "yes".

Is Unraid right for me?

When investing not only vast amounts of money, but also your precious time and your commitment to a particular software ecosystem, it's important to be aware of the limitations of the specific platform of choice, so you know in advance if you're choosing the right one, whether proprietary or open source.

More specifically, when it comes to building and maintaining a system to archive and consume data, serve media content, websites, applications, or possibly use the same machine as a high-performance personal computer (or more than one) at the same time, there are some essential questions in need of an answer. These should already do much in the way of directing your choice of platform:

- What is the budget?

- How much storage am I going to need?

- Am I going to plan my storage use long-term, or progressively add drives as my need for space increases?

- Am I going to mix-and-match different-sized disks or ones from different brands?

- How much time am I willing to dedicate to the upkeep of the system per week? 20? 5? 1? None—i.e. it should Just Work™ with minimal input?

- How much data loss can I afford—if any at all?

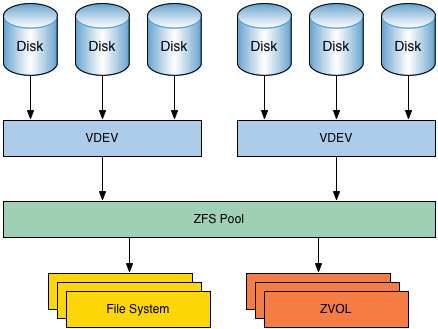

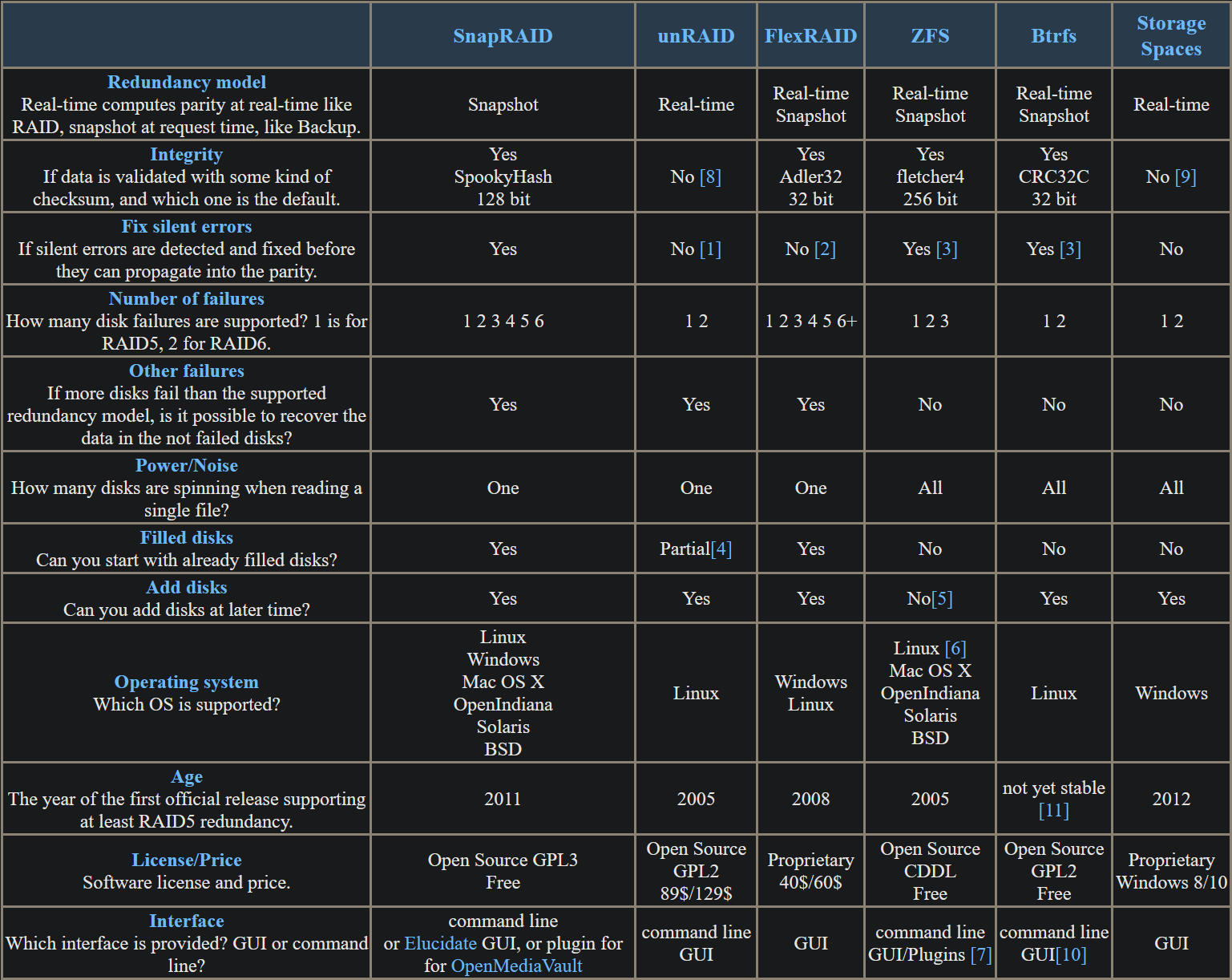

With most solutions, whether commercial or open-source, you're gonna have to use a system like ZFS or BTRFS to manage an array of multiple disks in a "software RAID" configuration (I will not be evaluating "hardware RAID" solutions within the scope of this post). While the performance and stability of BTRFS are debatable when used in its RAID5 mode (the one allowing you to expand storage space and recover from a drive failure), the leading open implementation of ZFS—OpenZFS—is a very established and polished product, to the point where it's used and offered commercially by names such as Amazon Web Services and Hewlett Packard Enterprise, and for good reason.

Its high scalability, reliability and performance, along with the advantages provided by a Copy-on-write filesystem such as the creation of instant snapshots, make it really desirable in the data-center space, especially when dealing with potentially hundreds of terabytes or even more.

So, what about ZFS then?

An optimal solution for very large amounts of data and industrial workloads does not however necessarily translate to a great experience in your average SOHO environment or homelab, specifically when it comes to the ability to scale the storage space and the inherent complexity of its architecture.

This is where—in my opinion—most DIY NAS or server solutions that predominantly rely on ZFS for pooled storage fall flat: you might put a lot of work into configuring your ZFS pool on TrueNAS or OpenMediaVault with, say, three 8 TB drives with single parity (i.e. you can bear up to a single disk failure) and migrating all of your data to a single location, but once you've used up your 16 TB of effective storage (which tends to happen sooner than you think once you start downloading and archiving content—even I thought I would never fill up my first 3 TB drive!), you can't just go out and buy a new 8 TB disk to get 8 TB of extra storage.

To get an extra 8 TB while keeping it protected against a disk failure, you'll instead have to create a new vdev (the name for the abstract building blocks that sit below the pool in the ZFS storage model, but above the physical drives) made up of two 8 TB disks, and still only get 8 TB added to your pool. Say you want to add another 8 TB of space after a year: that's two more 8 TB drives you need to purchase and install in a new ZFS vdev, all for a grand total of 32 TB of usable space and 24 TB of parity. Over 40% of the space on the seven disks you purchased is unusable. That's a lot of storage!

Of course, you could have gone with five 8 TB drives from the start, used a single parity and, assuming no more than 1 drive fails at a given time (which for our purposes is a fair hypothesis), you'd have 32 TB usable out of a single 40 TB vdev —that's an 80% efficiency, and you have saved the cost of two drives while retaining the same capacity and the ability to recover from any single drive failure. But are you gonna start out with the full 40 TB worth of drives, right away? And if you are the kind of user to need storage in the ballpark of 40 TB, then is it a stretch to imagine that you might one day need to expand past that?
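To make the arithmetic above easier to check, here's a minimal Python sketch comparing the two layouts. The `pool_capacity` helper and the tuple layout are my own invention for illustration, not anything ZFS provides, and the model is deliberately simplified: it ignores filesystem overhead and counts a two-disk mirror as one data disk plus one redundancy disk.

```python
def pool_capacity(vdevs):
    """Return (usable_tb, raw_tb) for a pool described as a list of vdevs.

    Each vdev is a tuple (disk_count, disk_size_tb, redundancy_disks).
    Simplified model: no filesystem overhead, all disks in a vdev equal.
    """
    usable = sum((count - redundancy) * size for count, size, redundancy in vdevs)
    raw = sum(count * size for count, size, _ in vdevs)
    return usable, raw


# Pool grown over time: 3 disks with single parity, then two 2-disk mirrors
grown = [(3, 8, 1), (2, 8, 1), (2, 8, 1)]
# Pool bought all at once: 5 disks with single parity
upfront = [(5, 8, 1)]

for name, layout in (("grown", grown), ("upfront", upfront)):
    usable, raw = pool_capacity(layout)
    print(f"{name}: {usable} TB usable of {raw} TB raw ({usable / raw:.0%} efficient)")
```

Both layouts end up with 32 TB usable, but the grown pool needs 56 TB of raw disks to get there, against 40 TB for the one bought upfront.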

The three main problems with ZFS can thus be summarized as follows:

- If you want to add any storage to the existing pool, you'll either have to rebuild the entire system, transferring all of your data somewhere else temporarily while you recreate your vdev and pool and then moving it back, or waste a very large amount of storage each time you scale up

- You're still bound to lose everything irrecoverably if any more than a single drive should die, even during a resilver (the process in which data from the missing drive is reconstructed on a replacement spare, and as such one of the most read-intensive tasks your drives will undergo); you can work around this with a higher parity ratio (e.g. dual parity instead of single), but that's even more wasteful as you'll need to give up at least two whole drives just for parity protection in each vdev

- You can't really mix'n'match drives in your vdev, so if you want to recreate the vdev to fit even a single newer, higher-capacity (read: more cost-effective) drive, you'll still need to relegate your older, smaller drives to a separate vdev and get at least two new drives of the higher capacity. You can't just add a single 16 TB drive to your array of 8 TBs, and to add an extra 16 TB you need to buy two 16 TB disks.

Even though OpenZFS recently added support for expanding an existing vdev, it's still not mature tech, and you'll still waste storage since new drives will still respect the existing proportion of space allocated to parity on each drive of your current vdev (say you have two 8 TB drives, so 50% parity each, then adding a new 8 TB will only yield 4 TB of extra space, not 8 TB!). You can easily see why this just doesn't work in most small or medium-scale environments.

What Unraid does differently

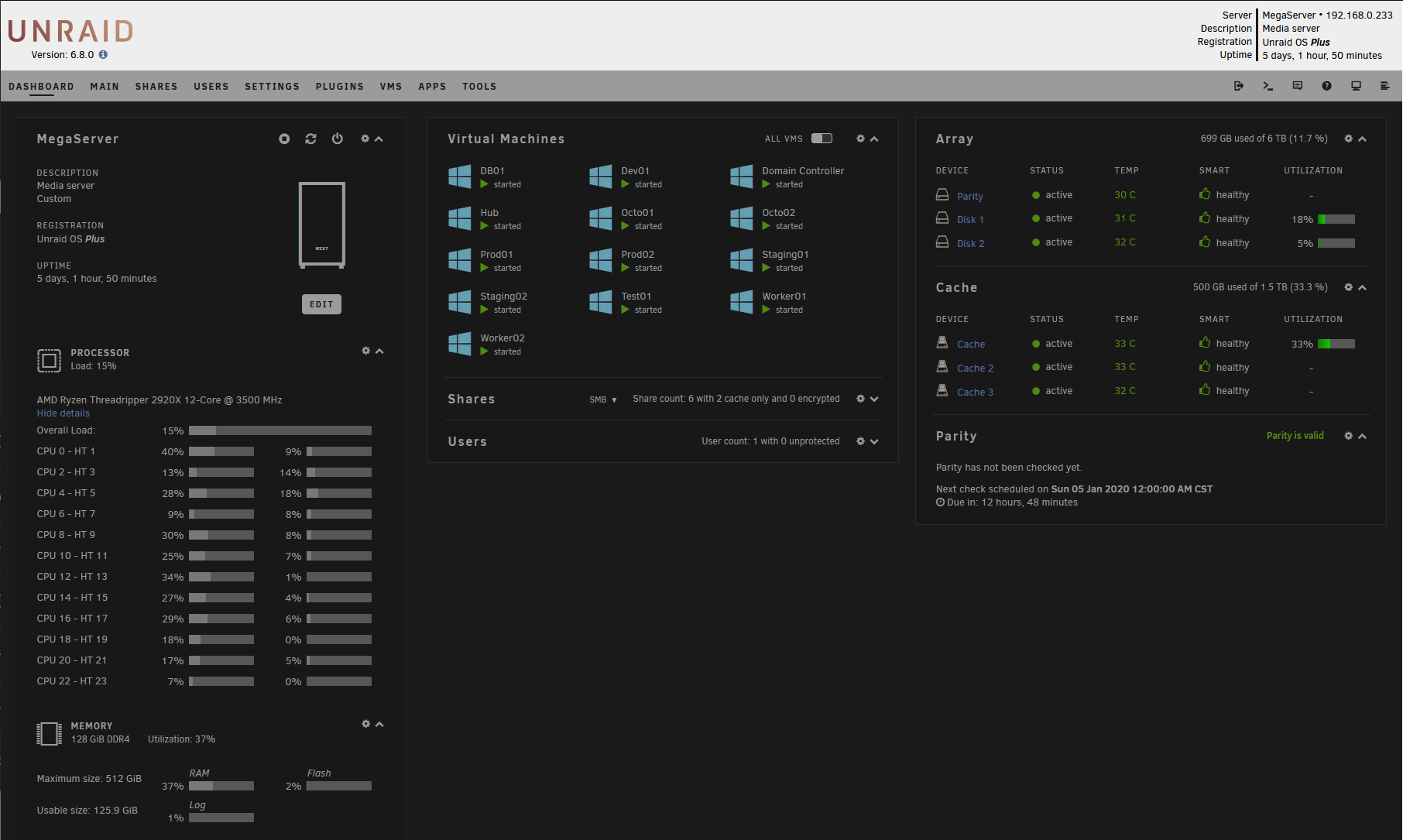

First and foremost, Unraid isn't a filesystem like ZFS or BTRFS: it's a NAS operating system, a virtualization hypervisor, and a platform with a diverse software ecosystem, a user-friendly web interface, and a radically different approach to home/office servers.

Using XFS under the hood, Unraid manages an array of independent disks, each with its own separate filesystem and potentially with vastly different capacity, make, model and speeds. Unraid then leverages its own custom FUSE filesystem called shfs to present the entire array of disks as a single unified virtual directory tree, so it looks like all your drives are working together in unison, but in reality a single file is always located on one drive and one only. The filesystem itself is one of the few proprietary components of an otherwise open-source Slackware Linux foundation.
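To give a feel for what "a single unified virtual directory tree" means, here's a rough Python sketch of the idea. It is not how shfs is implemented (that part is proprietary, and a real union filesystem works at the FUSE layer): the `union_listing` helper, the temporary `disk1`/`disk2` folders and the "first disk wins on a name clash" rule are all assumptions made up for this example.

```python
import os
import tempfile


def union_listing(disk_roots):
    """Map each relative file path to the single disk root that holds it."""
    merged = {}
    for root in disk_roots:
        for dirpath, _dirs, files in os.walk(root):
            for name in files:
                rel = os.path.relpath(os.path.join(dirpath, name), root)
                # Assumption of this sketch: on a name clash, the first disk wins
                merged.setdefault(rel, root)
    return merged


def demo():
    """Build two fake 'disks' in a temp folder and return the merged view."""
    with tempfile.TemporaryDirectory() as base:
        disk1 = os.path.join(base, "disk1")
        disk2 = os.path.join(base, "disk2")
        layout = (
            (disk1, "media/film.mkv"),
            (disk2, "media/show.mkv"),
            (disk2, "docs/notes.txt"),
        )
        for disk, rel in layout:
            path = os.path.join(disk, rel)
            os.makedirs(os.path.dirname(path), exist_ok=True)
            with open(path, "w") as handle:
                handle.write("x")
        listing = union_listing([disk1, disk2])
        # Normalize separators and keep only the disk name for readability
        return {rel.replace(os.sep, "/"): os.path.basename(root)
                for rel, root in listing.items()}


view = demo()
for rel in sorted(view):
    print(f"{rel} -> {view[rel]}")
```

The `media` folder shows up once in the merged view even though its files live on two different disks, while every individual file still sits whole on exactly one of them.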

But what about parity? Well, Unraid also manages parity in a unique way: while ZFS and most other RAID5-like systems spread the parity information across the entire array of disks, Unraid instead has a single, dedicated drive just for parity.
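Here's a toy Python sketch of the dedicated-parity idea, assuming plain byte-wise XOR parity and using short byte strings as stand-ins for whole disks. The `xor_parity` and `rebuild` helpers are hypothetical names of mine, not Unraid code, and a real array works on disk sectors rather than Python bytes.

```python
def xor_parity(disks, parity_size):
    """XOR all disks byte by byte; shorter disks count as zero-padded."""
    parity = bytearray(parity_size)
    for disk in disks:
        if len(disk) > parity_size:
            raise ValueError("data disk larger than parity disk")
        for i, byte in enumerate(disk):
            parity[i] ^= byte
    return bytes(parity)


def rebuild(surviving, parity, lost_size):
    """Recover one lost disk: XOR parity with the survivors, then trim."""
    return xor_parity(surviving + [parity], len(parity))[:lost_size]


# Three "disks" of different sizes, plus one parity "disk" as big as the largest
disks = [b"movies!!", b"docs", b"pics__"]
parity = xor_parity(disks, parity_size=8)

# Pretend the second disk died and rebuild it from the rest
lost = disks[1]
recovered = rebuild([disks[0], disks[2]], parity, len(lost))
print(recovered == lost)
```

Note how the data disks don't need to match in size: the only requirement in this model is that none of them is larger than the parity disk, since parity has to cover every byte position of the biggest one.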

The OS can also be configured with dual parity to survive up to two concurrent drive failures, or if you accept the risk you can even start out without any parity and optionally add the parity later on (Unraid will show a big, scary, orange warning sign though). The only limitation is that your data drives cannot be larger than your parity, so the parity drive effectively fixes your maximum capacity for new devices, but there's a procedure to juggle drives around to increase that limit and turn your parity into usable space in the process.

This comes with a few significant benefits:

- You can mix drives of any kind, and add extra space at any time. Need 1 TB more space in your 8 TB array made of two 4 TB disks? Just buy a new 1 TB drive and bam, you got 1000 GB of extra legroom, for a total of 9 TB

- While Unraid can recover from a single drive failure (or two, if you have dual parity), even in the worst-case scenario where more drives fail than your parity can reconstruct you'll still have access to the data on the surviving drives (remember: each drive has its own distinct filesystem and files are automatically distributed across them)

- This approach means that Unraid should have the least vendor lock-in of any commercial competitor. You can use a tool like mergerfs to recreate the effect of Unraid's filesystem union and mount your array as-is on any Linux operating system without any help from the company behind Unraid itself, but even just mounting the XFS drives on any OS with XFS support will show your data perfectly fine, just 'scattered' across your drives (respecting directory hierarchy and your own preferences)
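The capacity rule from the first point fits in a few lines of Python. This is a simplified model with a made-up `unraid_capacity` helper: usable space is just the sum of the data disks, and the only constraint checked is that no data disk is larger than the smallest parity disk.

```python
def unraid_capacity(data_tb, parity_tb=()):
    """Return usable TB for an array of independent data disks.

    Simplified model: usable space is the plain sum of the data disks,
    and no data disk may be larger than the smallest parity disk.
    """
    if parity_tb and data_tb and max(data_tb) > min(parity_tb):
        raise ValueError("a data disk is larger than the parity disk")
    return sum(data_tb)


# Two 4 TB data disks protected by one 4 TB parity disk
before = unraid_capacity([4, 4], parity_tb=[4])
# Adding a single 1 TB disk adds exactly 1 TB
after = unraid_capacity([4, 4, 1], parity_tb=[4])
print(before, after)

# An 8 TB data disk does not fit behind a 4 TB parity disk
try:
    unraid_capacity([4, 4, 8], parity_tb=[4])
    oversized_ok = True
except ValueError:
    oversized_ok = False
print(oversized_ok)
```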

In the event of a disk failure, Unraid will immediately notify you (it's possible to set up an automation to receive system messages via email, Telegram, Slack, or a variety of other services), but to the eyes of programs, VMs and other users accessing data on the server, everything will appear to be running smoothly, unchanged: that's Unraid seamlessly emulating the missing device until a replacement unit is installed on the server, and once that's done your system will be good as new, ready to recover from any additional drive failure that might occur.

Not just file storage: how Unraid fares as a general-purpose server or workstation

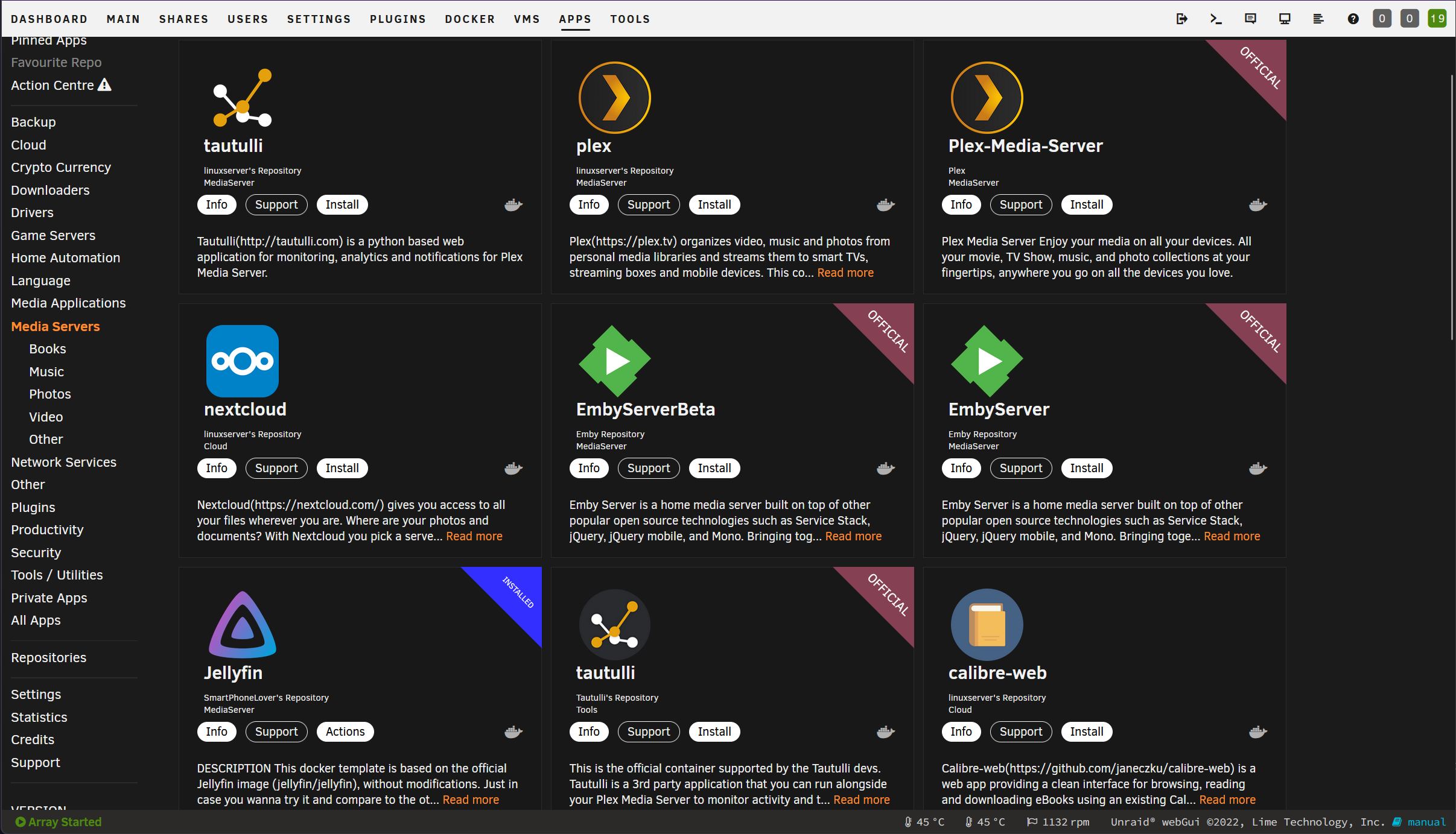

Unraid also has plenty of additional goodies, such as a web interface to manage users, files, preferences, and an "app store" which helps you easily spin up an instance of your favorite media streaming applications (Plex, Emby, Jellyfin), home automation software (Homebridge, Home Assistant), and pretty much any service you can think of: if it exists, there's probably a Docker container of it, and Unraid can set it up, deploy it and manage it for you.

You can even easily create virtual machines with Windows, any flavor of Linux, or even macOS if you dare to try. With GPU passthrough working out of the box for both NVIDIA and AMD cards, you can use a single PC tower as both a high-performance home NAS and as a Windows workstation or gaming PC, with near-native performance in graphics-intensive applications, and the experience of directly plugging a monitor into a GPU and USB peripherals being passed to the virtualized OS, without fiddling with VM windows and keyboard/mouse abstraction once everything is set up.

Unraid isn't for everyone

Of course, like anything else in life, Unraid isn't free from flaws, some of which might be deal-breakers depending on your use case:

- The most evident con of Unraid is price: depending on how many drives you need to use, as of the writing of this article a license of Unraid costs a one-time fee of between $59 (6 drives) and $129 (unlimited, although de-facto 28 array drives are supported max). Unraid offers a free trial that can be extended twice after the first month, for up to 2 months of free unlimited use, enough to get your feet wet and figure out if it's the right solution for you

- While Unraid does have an option of using an SSD as a "cache" volume, optionally with BTRFS RAID1 to avert data loss, the caching only applies to files being written to the array, or optionally files that you choose to keep permanently on the SSD. It doesn't cache files to optimize read speeds, which are otherwise what you'd expect from spinning rust drives, if not a bit lower due to FUSE overhead. Write speed is also limited by that of the parity drive(s). What you gain in convenience and safety, you pay for in raw performance

- Also on the subject of solid state storage, support for a full-SSD setup with only e.g. NVMe storage in your array is not officially recognized. Particularly problematic is the use of SSDs as a parity volume, since modern SSDs need to store a "real" filesystem on an OS with TRIM support in order to perform and endure optimally. It's still doable though

- Very weird: Unraid boots from a USB stick you constantly have to keep plugged into the server, although it's hardly used at all once the system is up and the entire image is loaded in memory. The function of the drive is twofold: keep all of your configuration and preferences saved separately from data and applications on the array, and authenticate your Unraid software license, which is unfortunately tied to the USB stick you're using (though it can be moved to a new unit from Unraid's website, in case the pendrive dies or you want to replace it for any other reason)

More alternatives to Unraid

Another option worth considering, if Unraid isn't for you, is one I mentioned earlier: mergerfs is a community-maintained project which allows you to create a virtual filesystem from the union of multiple "real" filesystems. While it doesn't have the same parity and missing device emulation functionality as Unraid, it's still a valid option, especially when combined with the parity system offered by SnapRAID, which brings the experience and feature set of OpenMediaVault closer to what you can get on Unraid, for free.

The main disadvantage to using SnapRAID with mergerfs on OMV, aside from having to set everything up more manually and not being a turnkey solution like Unraid, is that parity isn't updated in real time, and the system instead relies on periodic syncs ("snapshots") to keep data protected. This means that if your files were modified since the last snapshot, you can forget 1:1 perfect recovery of all data in the event of a failure, since depending on how much has changed on any drive (even minute changes that unfortunately happen to align with critical data), some of the data from the bad drive might not come back at all.
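A tiny Python sketch shows why stale parity hurts. It uses plain XOR as a stand-in for SnapRAID's actual parity codes, with four-byte strings playing the role of disks; the `xor_blocks` helper and the disk contents are invented for the example.

```python
def xor_blocks(blocks):
    """XOR equally sized byte strings together."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


disk_a = b"AAAA"
disk_b = b"BBBB"

# Parity as of the last sync
stale_parity = xor_blocks([disk_a, disk_b])

# A file on disk A changes afterwards; parity is NOT updated
disk_a = b"AAZZ"

# Disk B now fails and gets rebuilt from disk A plus the stale parity
rebuilt_b = xor_blocks([disk_a, stale_parity])
print(rebuilt_b == disk_b)

# With parity kept up to date in real time, the rebuild is exact
fresh_parity = xor_blocks([disk_a, disk_b])
rebuilt_b_fresh = xor_blocks([disk_a, fresh_parity])
print(rebuilt_b_fresh == disk_b)
```

Only the bytes that line up with the modified region on disk A come back wrong, which is the "minute changes that happen to align with critical data" problem in miniature.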

On the other hand, if you primarily care about only using tools you're highly familiar with, and that happens to be the Windows way of doing things, Microsoft also has a solution worth exploring, in the form of Storage Spaces. Like Unraid it offers real-time redundancy, which is a huge pro, but a careful examination of Windows OS features like Storage Spaces is far past the scope of this article.

Funnily enough, if you're still undecided between Unraid and ZFS and want to try both, as of Unraid 6.12 ZFS is also officially supported and works out of the box on user-created drive pools that exist alongside your 'traditional' Unraid array and cache, if for some reason you want to do that.

If this post sold you on Unraid, then give the free trial a spin, and if you like it please consider using my affiliate link to purchase a license, obviously at no additional cost to you :) https://unraid.net/pricing?via=1a2b47